Amazon Web Services (AWS) and NVIDIA have expanded their partnership to deliver cutting-edge infrastructure, software and services that advance generative AI for their customers.

The expanded collaboration combines NVIDIA’s latest multi-node systems, including next-generation GPUs, CPUs and AI software, with the AWS Nitro System, Elastic Fabric Adapter (EFA) interconnect and the scalability of Amazon EC2 UltraClusters.

The move responds to the escalating demands of generative AI applications, which need tools and performance suited to training foundation models and to building generative AI applications across industries.

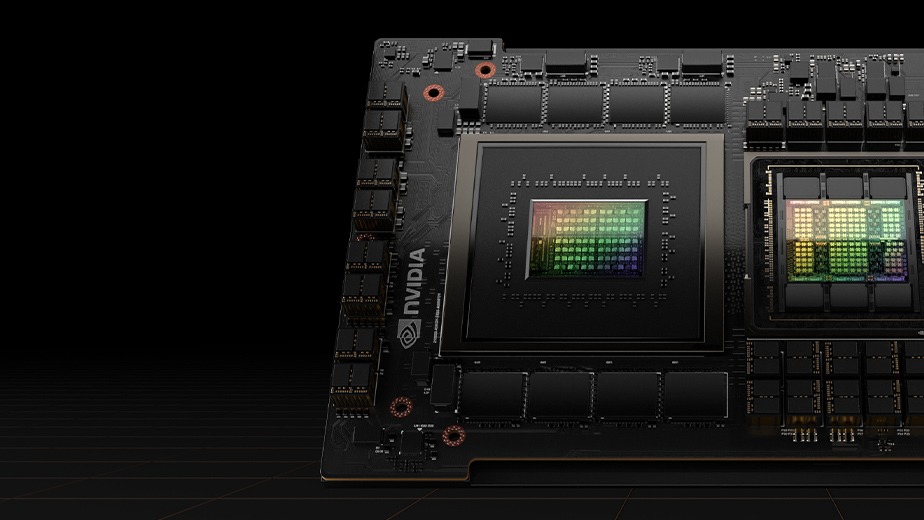

Under the collaboration, AWS becomes the first cloud provider to offer NVIDIA GH200 Grace Hopper Superchips with new multi-node NVLink technology. The NVIDIA GH200 NVL32 multi-node platform uses NVIDIA NVLink and NVSwitch technologies to connect 32 Grace Hopper Superchips into a single instance, available on Amazon Elastic Compute Cloud (Amazon EC2). Backed by AWS networking (EFA), advanced virtualisation (AWS Nitro System) and hyper-scale clustering (Amazon EC2 UltraClusters), the platform lets joint customers scale to thousands of GH200 Superchips.

NVIDIA DGX Cloud, NVIDIA’s AI-training-as-a-service offering, will also be hosted on AWS. Built on GH200 NVL32, this deployment gives developers access to the largest shared memory available in a single instance, accelerating the training of cutting-edge generative AI models and large language models (LLMs).

NVIDIA and AWS are also working together on Project Ceiba, an AI supercomputer built with GH200 NVL32 and Amazon EFA interconnect. Featuring 16,384 NVIDIA GH200 Superchips and capable of 65 exaflops of AI processing, the supercomputer will power NVIDIA’s next wave of generative AI innovation.

In addition, AWS has unveiled three new Amazon EC2 instance types: P5e instances powered by NVIDIA H200 Tensor Core GPUs, and G6 and G6e instances featuring NVIDIA L4 and NVIDIA L40S GPUs, respectively. Together, these instances cater to diverse applications such as AI fine-tuning, inference, graphics and video workloads, while the G6e instances are particularly suited to 3D workflows, digital twins and other applications built on NVIDIA’s Omniverse platform.

“AWS and NVIDIA have collaborated for more than 13 years, beginning with the world’s first GPU cloud instance. We continue to innovate with NVIDIA to make AWS the best place to run GPUs, combining next-gen NVIDIA Grace Hopper Superchips with AWS’s EFA powerful networking, EC2 UltraClusters’ hyper-scale clustering, and Nitro’s advanced virtualisation capabilities,” said Adam Selipsky, CEO of AWS.

According to Jensen Huang, Founder and CEO of NVIDIA, generative AI is transforming cloud workloads and putting accelerated computing at the foundation of diverse content generation.

“Driven by a common mission to deliver cost-effective state-of-the-art generative AI to every customer, NVIDIA and AWS are collaborating across the entire computing stack, spanning AI infrastructure, acceleration libraries, foundation models, to generative AI services,” he said.