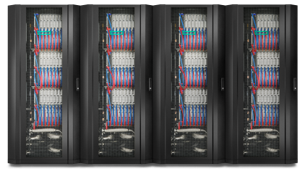

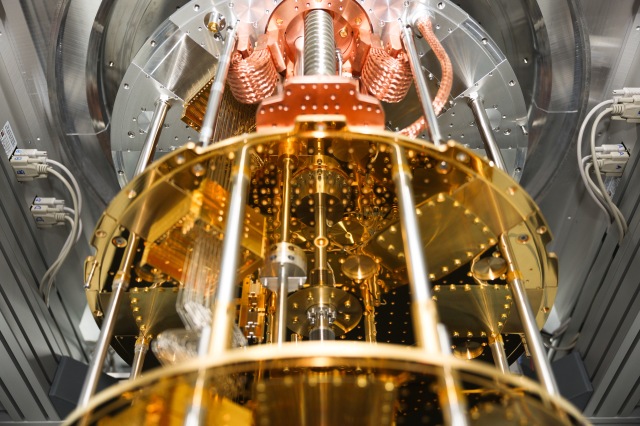

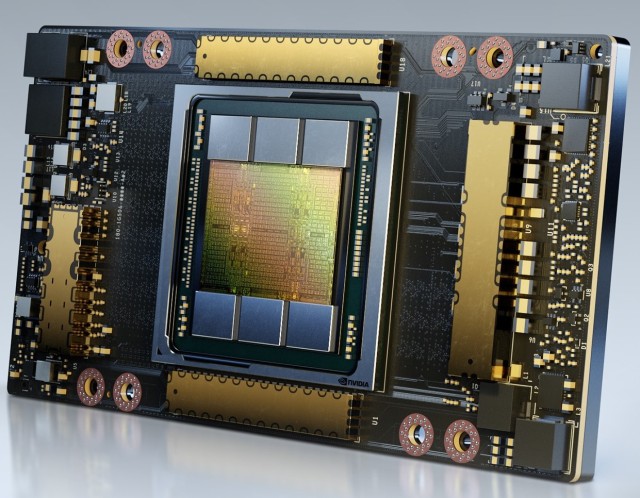

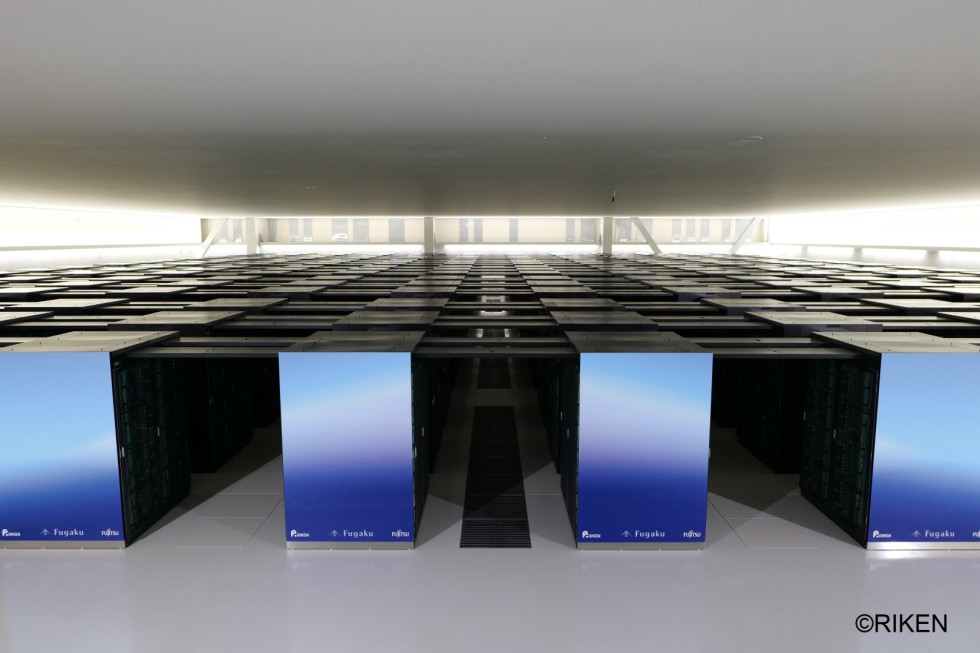

Japan’s new ABCI-Q supercomputer will be powered by NVIDIA platforms for accelerated and quantum computing. Designed to advance the nation’s quantum computing initiative, ABCI-Q will enable high-fidelity quantum simulations for research across industries. The supercomputer […]