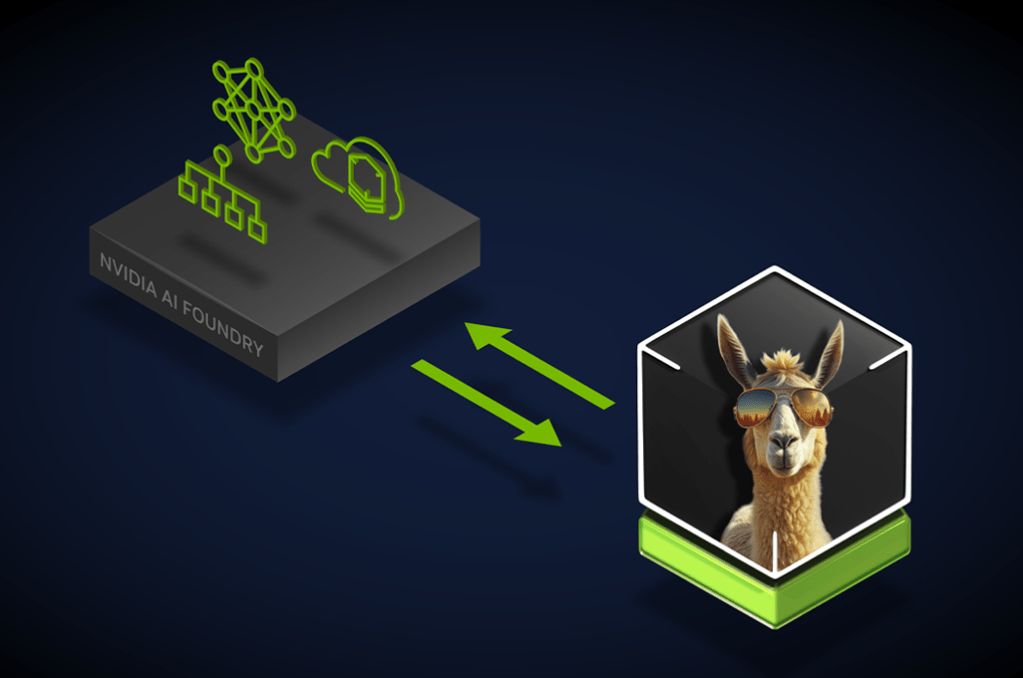

NVIDIA has introduced a new AI Foundry service and NVIDIA NIM inference microservices that aim to transform the generative AI landscape for enterprises worldwide. Dovetailing with the launch of Meta’s Llama 3.1 collection of open-source models, this combination marks a major step up in AI accessibility and customisation.

The AI Foundry service empowers enterprises and nations to create “supermodels” tailored to their specific industry needs. It utilises the NVIDIA NeMo platform, including the top-ranked NVIDIA Nemotron-4 340B Reward model, to enhance model creation and customisation.

Leveraging Llama 3.1 alongside NVIDIA’s software, computing resources, and expertise, enterprises can now train these models using both proprietary and synthetically generated data.

For organisations developing sovereign AI strategies, this allows them to build custom large language models that reflect their unique business requirements or cultural nuances.

“Llama 3.1 opens the floodgates for every enterprise and industry to build state-of-the-art generative AI applications. NVIDIA AI Foundry has integrated Llama 3.1 throughout and is ready to help enterprises build and deploy custom Llama supermodels,” said Jensen Huang, founder and CEO of NVIDIA.

According to Mark Zuckerberg, founder and CEO of Meta, the new Llama 3.1 models are a super-important step for open-source AI.

“With NVIDIA AI Foundry, companies can easily create and customise the state-of-the-art AI services people want and deploy them with NVIDIA NIM,” he said.

NVIDIA and Meta have also collaborated on a distillation recipe for Llama 3.1 to enable developers to create smaller custom models suitable for a broader range of infrastructure, including AI workstations and laptops.

NIM available for download

To facilitate rapid deployment, NVIDIA has made NIM inference microservices for Llama 3.1 models available for download. NVIDIA says these microservices offer up to 2.5 times higher throughput than standard inference methods, which should significantly boost production efficiency.

NVIDIA NIM partners providing enterprise, data and infrastructure platforms can now integrate the new microservices into their AI solutions to supercharge generative AI for more than five million developers and 19,000 startups in the NVIDIA community.

Production support for Llama 3.1 NIM and NeMo Retriever NIM microservices is available through NVIDIA AI Enterprise. Members of the NVIDIA Developer Program will soon be able to access NIM microservices for free for research, development and testing on their preferred infrastructure.