NVIDIA and Google Cloud are collaborating to bring agentic AI capabilities to enterprises while ensuring data security and regulatory compliance.

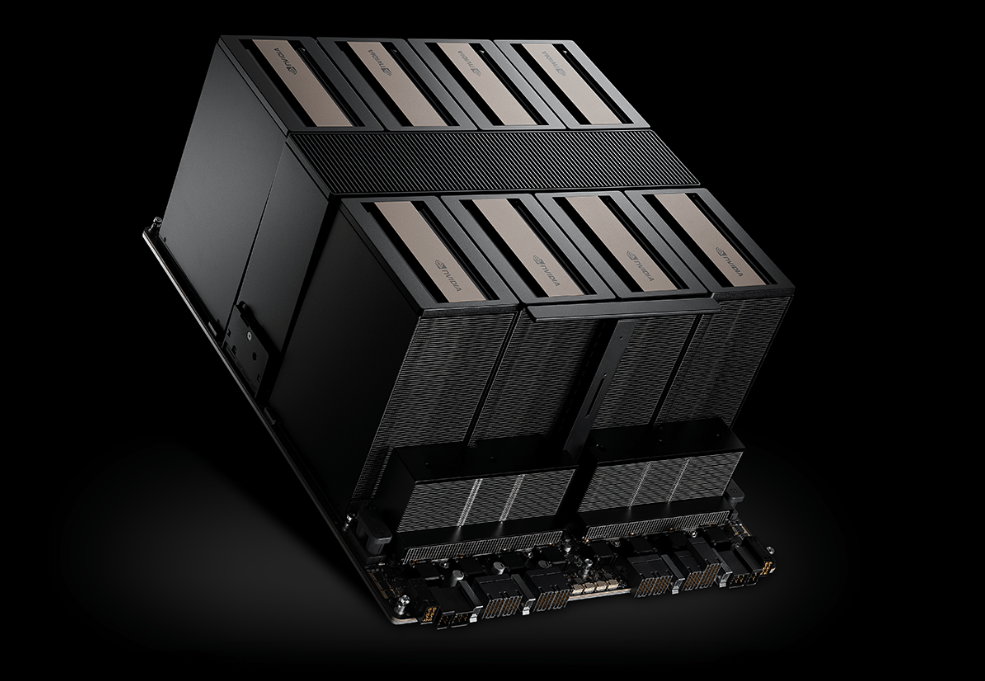

The partnership integrates Google’s Gemini family of AI models with NVIDIA’s Blackwell HGX and DGX platforms, leveraging NVIDIA Confidential Computing to protect sensitive information.

By deploying Gemini models on-premises through Google Distributed Cloud, enterprises can maintain control over sensitive data, such as patient records or financial transactions, while benefiting from the advanced reasoning capabilities of agentic AI.

NVIDIA Confidential Computing ensures that both user prompts and fine-tuning data remain secure, preventing unauthorised access or tampering.

“By bringing our Gemini models on premises with NVIDIA Blackwell’s breakthrough performance and confidential computing capabilities, we’re enabling enterprises to unlock the full potential of agentic AI,” said Sachin Gupta, Vice President and General Manager of Infrastructure and Solutions at Google Cloud.

As the next frontier in artificial intelligence, agentic AI systems can reason, adapt and make decisions autonomously. Unlike traditional AI models that rely on static knowledge, agentic AI systems excel in dynamic environments. For example, in IT support, these systems can diagnose issues and execute fixes autonomously. In finance, they can proactively block fraudulent transactions or adjust fraud detection rules in real time.

Google Cloud is also enhancing its infrastructure to support agentic AI at scale. The newly announced GKE Inference Gateway integrates with NVIDIA Triton Inference Server and NeMo Guardrails to optimise AI workload deployment while ensuring centralised model security.

Additionally, Google Cloud is working to improve observability for agentic AI workloads by integrating NVIDIA Dynamo, an open-source library for serving and scaling reasoning models.