NVIDIA continued its dominance of the MLPerf Training benchmarks, with its new Blackwell architecture delivering record-breaking performance in the latest tests.

The results showed Blackwell outpacing all competitors across every MLPerf Training v5.0 benchmark, including the most demanding large language model (LLM) tests.

Blackwell delivered 2.2 times the performance of the previous generation at the same scale on the Llama 3.1 405B pre-training benchmark. On the Llama 2 70B LoRA fine-tuning benchmark, NVIDIA’s DGX B200 system, equipped with eight Blackwell GPUs, delivered 2.5 times the performance of the equivalent system submitted in the prior round.

NVIDIA’s AI platform was the only one to submit results for every benchmark, demonstrating its versatility and leadership across a wide range of AI workloads, from LLMs and recommendation systems to object detection and graph neural networks.

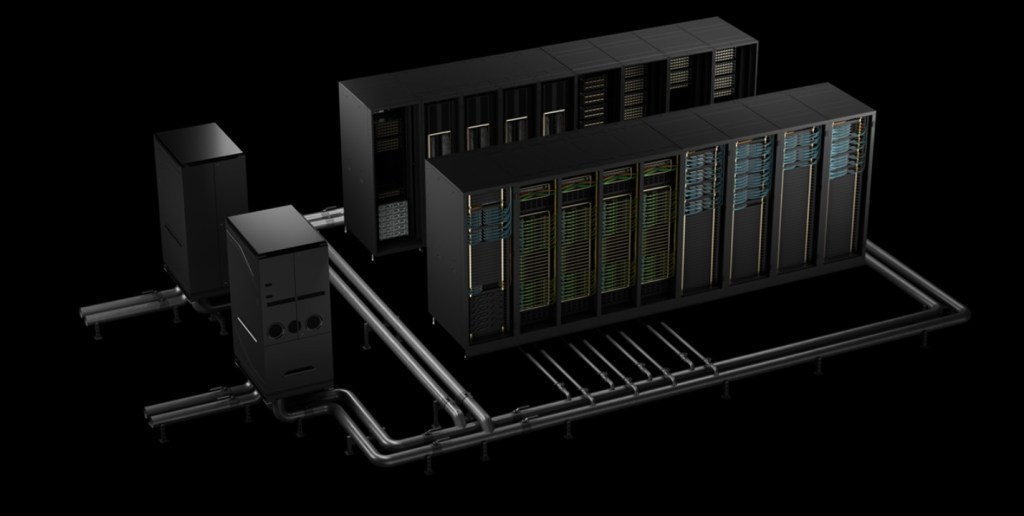

NVIDIA’s own Tyche and Nyx AI supercomputers, both powered by the Blackwell platform, led the charge. Additional results were submitted in collaboration with CoreWeave and IBM, utilising a total of 2,496 Blackwell GPUs and 1,248 Grace CPUs.

The MLPerf results highlight NVIDIA’s ongoing investment in software innovation, with the NeMo Framework and CUDA-X libraries playing a pivotal role in accelerating AI training and deployment.

Widespread adoption

The extensive participation of partners such as ASUS, Cisco, Dell Technologies, Google Cloud, Hewlett Packard Enterprise, Lenovo, Oracle Cloud Infrastructure, and Supermicro shows the widespread adoption of NVIDIA’s AI platform across the industry.

NVIDIA’s latest advancements with Blackwell also speed up the creation of agentic AI-powered applications: systems that can reason and make decisions on their own. These cutting-edge applications are expected to fuel the next wave of innovation across a wide range of industries, including finance, healthcare and manufacturing.