AMD has unveiled a vision for an open, integrated AI ecosystem at its Advancing AI 2025 event in San Jose, where it also introduced new silicon, software, and rack-scale systems designed to accelerate the next wave of AI innovation.

It showcased its new Instinct MI350 Series GPUs, the next-generation Helios rack-scale AI platform, and the ROCm 7 open-source software stack, all aimed at delivering unmatched performance, flexibility and energy efficiency for AI workloads.

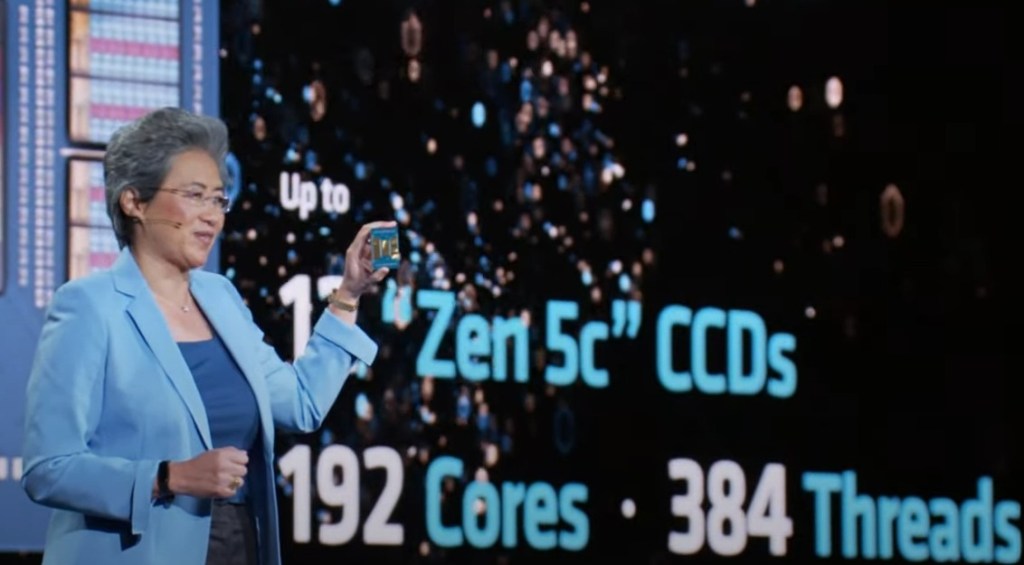

“AMD is driving AI innovation at an unprecedented pace, highlighted by the launch of our AMD Instinct MI350 series accelerators, advances in our next generation AMD Helios rack-scale solutions, and growing momentum for our ROCm open software stack,” said Dr Lisa Su, Chair and CEO of AMD.

Key Announcements

- Instinct MI350 series GPUs: AMD’s latest accelerators deliver a fourfold increase in AI compute and a 35x leap in inferencing performance over previous generations. The MI355X model offers up to 40 percent more tokens-per-dollar than competing solutions, directly targeting NVIDIA’s dominance in the AI chip market.

- Helios rack-scale platform: Previewed as the next-generation AI server, Helios will use the upcoming MI400 Series GPUs and Zen 6 CPUs to deliver up to 10x higher inference performance on advanced models. The platform embraces open standards with networking and interconnects designed to be shared across the industry.

- ROCm 7 software and Developer Cloud: The latest ROCm release improves support for popular AI frameworks, expands hardware compatibility and introduces new tools to streamline AI development. AMD’s Developer Cloud now offers global access for rapid, high-performance AI experimentation and deployment.

- Energy efficiency: AMD surpassed its previous five-year goal with a 38x improvement in energy efficiency for AI training and HPC nodes, and set a new target to achieve a 20x increase in rack-scale energy efficiency by 2030. This could reduce the electricity needed to train large AI models by 95 percent by the end of the decade.
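The 95 percent figure follows from the 20x target if one assumes the same amount of training work is done at 20 times the efficiency. A minimal sketch of that arithmetic is below; the function name `energy_reduction` is hypothetical and used only for illustration, and the calculation ignores any growth in model size or workload.

```python
def energy_reduction(efficiency_gain: float) -> float:
    """Fraction of energy saved for the same work, given an efficiency multiplier.

    Assumption: the workload is held constant, so energy scales as 1 / gain.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 - 1.0 / efficiency_gain


# A 20x efficiency gain leaves 1/20 of the original energy use.
reduction = energy_reduction(20)
print(f"Energy saved at 20x efficiency: {reduction:.0%}")
```

Under this assumption, a 20x gain means the same job needs one-twentieth of the electricity, which is the 95 percent reduction cited.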

Broad Industry Ecosystem

AMD’s event was marked by strong endorsements and technical partnerships with major AI players:

- Meta: Using AMD’s MI300X for Llama 3 and 4 inference, with plans to adopt MI350 and MI400 series for next-generation AI workloads.

- OpenAI: CEO Sam Altman confirmed the use of MI300X and deep collaboration on MI400 platforms for both research and GPT production models on Azure.

- Microsoft: Deploying Instinct MI300X for both proprietary and open-source models in Azure’s cloud.

- Oracle Cloud Infrastructure: Among the first to adopt the MI355X, planning zettascale clusters with up to 131,072 GPUs.

- Other partners: xAI, Cohere, Humain, Red Hat, Astera Labs, and Marvell are all integrating AMD’s AI technologies into their solutions.

Industry Impact

These developments signal a major escalation in the competition to power the future of AI. By pushing open standards, AMD is positioning itself as the champion of a collaborative, interoperable AI ecosystem.

AMD's push for openness echoes what NVIDIA Founder and CEO Jensen Huang shared in his keynote address at Computex 2025 in Taipei in May.

He announced then that NVIDIA would allow customers to deploy rival chips within data centres built around NVIDIA’s technology — a notable departure from the company’s proprietary approach.

The move acknowledges the growing trend of major cloud providers and technology companies, such as Microsoft and Amazon, developing their own in-house AI chips and seeking greater flexibility in their infrastructure choices.

AMD’s emphasis on open collaboration and energy efficiency is likely to accelerate innovation, lower barriers for new entrants, and foster a more diverse and resilient AI ecosystem.

“The future of AI is not going to be built by any one company or in a closed ecosystem. It’s going to be shaped by open collaboration across the industry,” said Su.