Qualcomm has unveiled its AI200 and AI250 chips for the AI data centre market, placing it in direct competition with established leaders such as NVIDIA and AMD.

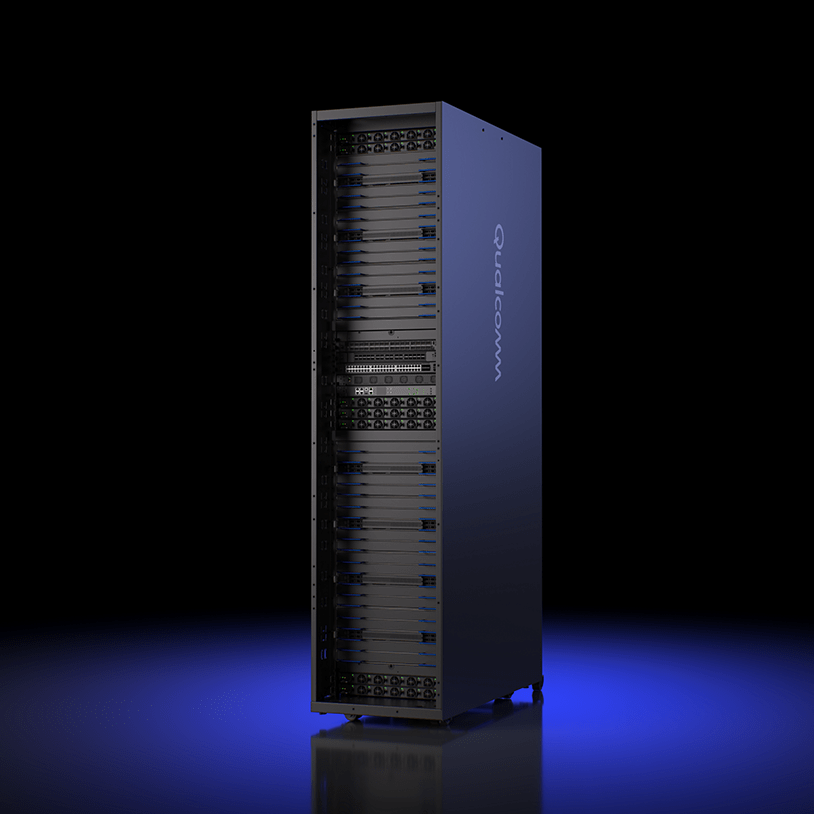

The new AI inference accelerators target rack-scale data centre deployments, with Qualcomm emphasising memory capacity, power efficiency and performance optimised specifically for generative AI inference workloads.

Expected to be available in 2026, the AI200 chip offers 768GB of LPDDR memory per card to enable data centres to deploy very large AI models with minimal latency and excellent energy efficiency.
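To put the 768GB figure in context, here is a rough back-of-envelope sketch of how much memory a model's weights alone would need at different precisions. The model sizes and the helper names (`weights_gb`, `fits_on_card`) are illustrative assumptions, not Qualcomm figures, and the estimate ignores the KV cache, activations and runtime overhead, so real headroom would be smaller.

```python
# Back-of-envelope estimate only: counts weight storage, nothing else.
CARD_MEMORY_GB = 768  # per-card LPDDR capacity quoted for the AI200


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in GB (taking 1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * bytes_per_param


def fits_on_card(params_billion: float, bytes_per_param: float) -> bool:
    """True if the weights alone fit within one card's memory."""
    return weights_gb(params_billion, bytes_per_param) <= CARD_MEMORY_GB


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params, precision, nbytes in [(70, "16-bit", 2), (405, "16-bit", 2), (405, "8-bit", 1)]:
        size = weights_gb(params, nbytes)
        verdict = "fits" if fits_on_card(params, nbytes) else "does not fit"
        print(f"{params}B parameters at {precision}: ~{size:.0f} GB, {verdict} in {CARD_MEMORY_GB} GB")
```

Under these assumptions, a hypothetical 405-billion-parameter model would exceed a single card at 16-bit precision (about 810 GB) but fit comfortably once quantised to 8 bits (about 405 GB).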

The AI250, targeted for release in 2027, introduces a near-memory computing architecture that Qualcomm says boosts memory bandwidth by more than 10x while cutting power consumption in half compared to traditional GPU-based systems.

Qualcomm is focusing on inference rather than training workloads, and aims to differentiate its products from NVIDIA’s and AMD’s GPU-centric solutions through gains in performance per dollar per watt and total cost of ownership (TCO) for AI inference.

By leveraging its experience in smartphone processor architectures, particularly the Hexagon neural processing unit (NPU), Qualcomm delivers higher performance-per-watt for AI tasks, which is critical for large-scale data centre operations.

Both chips support direct liquid cooling, scale over PCIe and Ethernet connectivity, and are designed for dense deployments drawing up to 160 kW per rack, comparable to NVIDIA’s high-end NVL72 systems.

“With Qualcomm AI200 and AI250, we’re redefining what’s possible for rack-scale AI inference. These innovative new AI infrastructure solutions empower customers to deploy generative AI at unprecedented TCO, while maintaining the flexibility and security modern data centers demand,” said Durga Malladi, SVP & GM of Technology Planning, Edge Solutions & Data Center at Qualcomm Technologies.