AMD has revealed that the pioneering foundation model ZAYA1 from Zyphra was trained entirely on an AMD hardware platform.

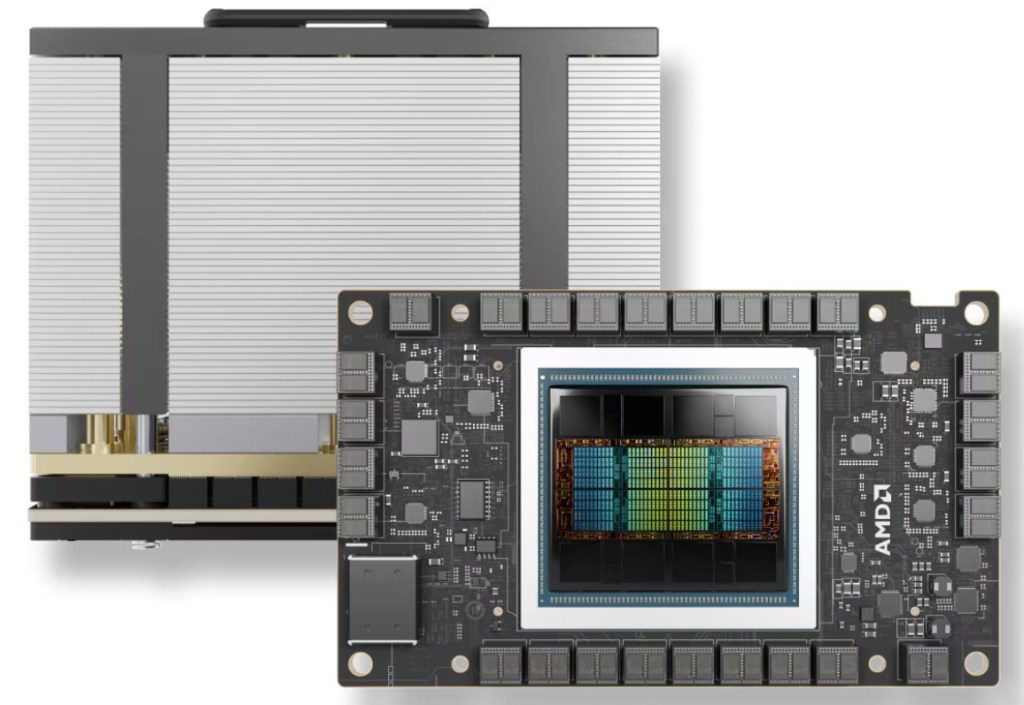

ZAYA1 is the first large-scale Mixture-of-Experts model to be trained exclusively using AMD Instinct MI300X GPUs, AMD Pensando networking and the ROCm open software stack.

Results published by Zyphra show that the ZAYA1-base model delivers performance competitive with or superior to leading open models: it outperforms Llama-3-8B and OLMoE, and rivals Qwen3-4B and Gemma3-12B across core benchmarks for reasoning, mathematics and coding.

The success highlights the scalability and efficiency of AMD’s platform for production-scale AI. Specifically, the 192 GB of high-bandwidth memory on the AMD Instinct MI300X GPU was critical, allowing efficient large-scale training without complex sharding techniques. Zyphra also reported model save times more than 10 times faster using AMD’s optimised distributed I/O.
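
To see why per-GPU memory capacity bears on sharding, a rough back-of-envelope estimate can help. The sketch below is illustrative only: the parameter count is a hypothetical figure, not one reported in this article, and the 16 bytes per parameter assumes a common mixed-precision Adam setup (16-bit weights and gradients, plus 32-bit master weights and two 32-bit optimizer moments). Activations, buffers and framework overhead are ignored, and Zyphra's actual training configuration may differ.

```python
# Rough estimate of persistent training state per model replica.
# Assumption: mixed-precision Adam, which keeps per parameter
#   2 bytes  16-bit weights
#   2 bytes  16-bit gradients
#   4 bytes  32-bit master weights
#   4 bytes  Adam first moment
#   4 bytes  Adam second moment
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4  # 16 bytes

HBM_GB = 192  # MI300X memory capacity cited in the article


def training_state_gb(num_params: int) -> float:
    """Return weight, gradient and optimizer state size in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM / 1e9


def fits_unsharded(num_params: int, hbm_gb: float = HBM_GB) -> bool:
    """True if the training state alone fits on one GPU (activations excluded)."""
    return training_state_gb(num_params) <= hbm_gb


# Hypothetical model size chosen for illustration, not taken from the article.
example_params = 8_000_000_000

needed = training_state_gb(example_params)
print(f"Training state: {needed:.0f} GB of {HBM_GB} GB available")
print("Fits without sharding the optimizer state:", fits_unsharded(example_params))
```

Under these assumptions, a model of this size would need about 128 GB for its training state, leaving headroom within 192 GB. On a device with less memory, the same state would have to be split across GPUs, which is the kind of sharding the article says was avoided.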

“Our results highlight the power of co-designing model architectures with silicon and systems, and we’re excited to deepen our collaboration with AMD and IBM as we build the next generation of advanced multimodal foundation models,” said Krithik Puthalath, CEO of Zyphra.

“AMD leadership in accelerated computing is empowering innovators like Zyphra to push the boundaries of what’s possible in AI. This milestone showcases the power and flexibility of AMD Instinct GPUs and Pensando networking for training complex, large-scale models,” said Emad Barsoum, Corporate Vice President of AI and engineering at AMD’s Artificial Intelligence Group.