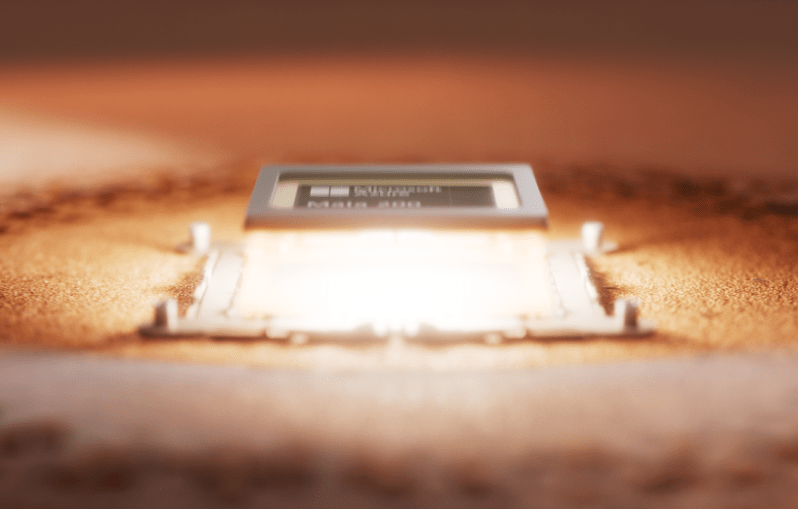

Microsoft has unveiled the Maia 200, an AI inference accelerator that the company says outperforms key competing chips in the hyperscale market.

Built on TSMC’s 3nm process with more than 140 billion transistors, the chip delivers more than 10 petaFLOPS in FP4 and five petaFLOPS in FP8 within a 750W TDP, paired with 216GB HBM3e memory at seven TB/s bandwidth.
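To put those headline figures in context, the short Python sketch below derives a few back-of-envelope ratios from them. It uses only the numbers quoted above; the "more than" values are treated as lower bounds, decimal units are assumed (1 GB = 10^9 bytes), and the function name `derived_metrics` is purely illustrative. These are peak-rate calculations, not measured results.

```python
# Figures quoted in the article; "more than" values are treated as lower bounds.
FP4_FLOPS = 10e15   # 10 petaFLOPS at FP4
FP8_FLOPS = 5e15    # 5 petaFLOPS at FP8
TDP_WATTS = 750     # thermal design power
HBM_BYTES = 216e9   # 216 GB of HBM3e (decimal gigabytes assumed)
HBM_BW = 7e12       # 7 TB/s memory bandwidth


def derived_metrics() -> dict:
    """Return rough ratios implied by the quoted peak specifications."""
    return {
        # Peak throughput per watt of TDP, in teraFLOPS per watt.
        "fp4_tflops_per_watt": FP4_FLOPS / TDP_WATTS / 1e12,
        "fp8_tflops_per_watt": FP8_FLOPS / TDP_WATTS / 1e12,
        # Time to stream the entire HBM capacity once, in milliseconds.
        "hbm_full_read_ms": HBM_BYTES / HBM_BW * 1e3,
        # Peak FP4 operations available per byte read from HBM.
        "fp4_flops_per_byte": FP4_FLOPS / HBM_BW,
    }


if __name__ == "__main__":
    for name, value in derived_metrics().items():
        print(f"{name}: {value:.2f}")
```

On these assumptions, the chip offers roughly 13 teraFLOPS per watt at FP4, and reading all 216GB of memory once takes about 31 milliseconds. That second figure gives a rough lower bound on per-token latency for a bandwidth-bound model that fills the memory.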

Maia 200 features native FP8/FP4 tensor cores, 272MB on-chip SRAM, and specialised data movement engines for high model utilisation.

“Maia 200 is part of our heterogenous AI infrastructure and will serve multiple models, including the latest GPT-5.2 models from OpenAI, bringing performance per dollar advantage to Microsoft Foundry and Microsoft 365 Copilot,” wrote Scott Guthrie, Executive Vice President of Cloud and AI at Microsoft, in a blog post.

The new chip is being deployed in Azure’s US Central data centre near Des Moines, Iowa, with US West 3 near Phoenix next.

Its introduction adds competition to the AI chip race: Microsoft claims three times the FP4 performance of Amazon’s third-generation Trainium and FP8 performance exceeding that of Google’s seventh-generation TPU, along with 30 percent better performance per dollar than Microsoft’s current fleet.
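A 30 percent gain in performance per dollar is not the same as a 30 percent cut in cost. The brief Python sketch below shows the conversion, assuming a fixed workload and that the quoted gain applies uniformly to it; the function name `relative_cost` is illustrative only.

```python
def relative_cost(perf_per_dollar_gain: float) -> float:
    """Cost of a fixed workload relative to the baseline.

    perf_per_dollar_gain is fractional, e.g. 0.30 for "30 percent better".
    """
    return 1.0 / (1.0 + perf_per_dollar_gain)


if __name__ == "__main__":
    saving = 1.0 - relative_cost(0.30)
    print(f"Implied cost reduction: {saving:.1%}")  # prints "Implied cost reduction: 23.1%"
```

In other words, the same inference work would cost roughly 23 percent less under that assumption, not 30 percent less.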

As a purpose-built inference accelerator, Maia 200 lacks training versatility compared to the NVIDIA B200 Blackwell, which excels in both training and inference.

However, the move intensifies hyperscaler competition, reduces Microsoft’s reliance on NVIDIA amid rising inference demand, and puts pressure on AWS and Google Cloud to accelerate their own custom silicon.