It’s no dinosaur, but the newly announced NVIDIA Titan RTX, dubbed T-Rex, is certainly very powerful, delivering 130 teraflops of deep learning performance and 11 GigaRays of ray-tracing performance.

NVIDIA has introduced the GeForce GTX 750 Ti and GTX 750, the first GPUs based on the new Maxwell graphics architecture.

At 1080p resolution, the GeForce GTX 750 Ti delivers double the performance of the GTX 550 Ti while using half the power. The GTX 550 Ti, built on the Fermi architecture, is one of the most used NVIDIA GPUs, according to the Steam Hardware Survey.

“We know that to advance performance, we must advance performance per watt, because every system we design for has a power limit — from supercomputers to PCs to smartphones,” said Scott Herkelman, General Manager of the GeForce business unit at NVIDIA. “That’s why we architected Maxwell to be the most efficient GPU architecture ever built.”

Visitors to SIGGRAPH Asia last week were the first in Asia to catch a demo of NVIDIA GRID VCA. Essentially, NVIDIA GRID VCA is a powerful GPU-based system that runs complex applications such as Autodesk […]

Power is a big limitation in mobile devices despite improvements in battery power, according to Neil Trevett, Vice President of Mobile Content at NVIDIA.

“Power is the new performance limiter. Developers need to use acceleration to preserve battery life and high performance,” he said at NVIDIA Tech Talk at SIGGRAPH Asia in Hong Kong.

On the other hand, advanced mobile GPUs and new sensors are combining to make vision processing the next wave of mobile visual processing. NVIDIA is building a stack of advanced silicon, APIs and libraries to enable advanced mobile vision applications.

IBM and NVIDIA plan to collaborate on GPU-accelerated versions of IBM’s wide portfolio of enterprise software applications — taking GPU accelerator technology for the first time into the heart of enterprise-scale data centres.

The collaboration aims to enable IBM customers to more rapidly process, secure and analyse massive volumes of streaming data.

“Harnessing GPU technology to IBM’s enterprise software platforms will bring advanced, in-memory processing to a wider variety of new application areas,” said Sean Poulley, Vice President of Databases and Data Warehousing at IBM. “We are looking at a new generation of higher-performance solutions to help data center customers overcome their most challenging computing problems.”

The NVIDIA Tesla K40 GPU accelerator is arguably the world’s highest performance accelerator ever built. It is capable of delivering extreme performance to a wide range of scientific, engineering, high performance computing (HPC), and enterprise applications.

Providing double the memory and up to 40 percent higher performance than its predecessor, the Tesla K20X GPU accelerator, and 10 times higher performance than the fastest CPU, the Tesla K40 GPU is the world’s first and highest-performance accelerator optimised for big data analytics and large-scale scientific workloads.

Featuring intelligent NVIDIA GPU Boost technology, which converts power headroom into a user-controlled performance boost, the Tesla K40 GPU accelerator enables users to unlock the untapped performance of a broad range of applications.

AMD fired the first salvo after a long period of silence to reignite the GPU battle. But NVIDIA has hit back immediately and regained the crown with the GeForce GTX 780 Ti. Besides sheer performance, NVIDIA’s armoury also includes G-SYNC, GeForce Experience and ShadowPlay.

The new card delivers smooth frame rates at extreme resolutions for the latest and hottest PC games, including Assassin’s Creed IV: Black Flag, Call of Duty: Ghosts and Batman: Arkham Origins. It also does this with cool, quiet operation that is critical to providing an immersive gaming experience.

Powering the GPU is the NVIDIA Kepler architecture, which provides an advanced, low-thermal-density design that translates into better cooling, quieter acoustics and record-breaking performance.

Businesses across Australia can now deploy graphics-accelerated virtual desktops to their employees – cost-effectively, anywhere and on any device – with the adoption of NVIDIA GRID technology by leading technology partners.

Servers from Cisco, Dell, HP, IBM and others are now incorporating NVIDIA GRID into their desktop virtualisation solutions. Combined with enterprise virtualisation software from Citrix, Microsoft or VMware, these solutions can deliver GPU-accelerated applications and desktops to engineers, designers, architects, product design teams and special-effects artists throughout Australia.

Melbourne-based Xenon Systems was appointed as the first NVIDIA GRID Demo Centre for Australia and New Zealand earlier this year.

Visual computing is no longer just about gaming but has now permeated everyday life, Dr Simon See, Director and Chief Solution Architect of NVIDIA, told a gathering of 450 start-ups, investors and R&D providers in digital media.

He pointed out that GPUs now help power the 3D web, location-based visualisation applications, creative content creation, computer vision, user interfaces, image recognition, HD video processing, and virtual worlds.

In his talk, Simon also discussed the vibrant ecosystem of developers that have adopted the CUDA GPU computing platform, how early stage companies can leverage the GPU for visual and other computing applications, and what global programmes NVIDIA offers to nurture and inspire innovation and business opportunities throughout these ecosystems.

NVIDIA has formed an alliance with Ubisoft to offer PC gamers the best gaming experiences possible for Ubisoft’s biggest fall titles, including Tom Clancy’s Splinter Cell Blacklist, Assassin’s Creed IV Black Flag and Watch Dogs.

NVIDIA’s developer technology team is working closely with Ubisoft’s development studios on incorporating graphics technology innovations to create game worlds that deliver new heights of realism and immersion. Examples include NVIDIA® TXAA™ antialiasing, which provides Hollywood levels of smooth animation, along with soft shadows, HBAO+ (horizon-based ambient occlusion) and advanced DX11 tessellation.

“PC gaming is stronger than ever and Ubisoft understands that PC gamers demand a truly elite experience — the best resolutions, the smoothest frame rates and the latest gaming breakthroughs,” said Tony Tamasi, Senior Vice President of Content and Technology at NVIDIA. “We’ve worked closely with Ubisoft’s incredibly talented creative team throughout the development process to incorporate our technologies and deliver the most immersive and visually spectacular game worlds imaginable.”

By Edward Lim, Managing Consultant, CIZA Concept

Founded in 1987, Rhythm & Hues Studios is a visual effects company with corporate headquarters in El Segundo, California and production facilities in India, Malaysia, Canada, and Taiwan.

The studio has won the Academy Award for Best Visual Effects for Babe (1995), The Golden Compass (2008) and Life of Pi (2013), on top of four Scientific and Technical Academy Awards from the Academy of Motion Picture Arts and Sciences (AMPAS).

Computing power and unified platform needed

A lot of computing power is needed to create computer graphics imagery (CGI) in films. Besides this requirement, Rhythm & Hues also needed to standardize on a platform that can be used in its studios around the world. This unified platform is critical as work is shared and done concurrently in different parts of the world.

NVIDIA has launched the NVIDIA GeoInt Accelerator, the world’s first GPU-accelerated geospatial intelligence platform to enable security analysts to find actionable insights quicker and more accurately than ever before from vast quantities of raw data, images and video.

The platform provides defence and homeland security analysts with tools that enable faster processing of high-resolution satellite imagery, facial recognition in surveillance video, combat mission planning using geographic information system (GIS) data, and object recognition in video collected by drones.

It offers a complete solution consisting of an NVIDIA Tesla GPU accelerated system, software applications for geospatial intelligence analysis, and advanced application development libraries.

Huawei is banking on the popularity of tablets among the younger generation with the launch of the Huawei MediaPad 7 Youth. Compact, sturdy and stylish with its aluminum metal unibody measuring just 9.9mm and weighing […]

By Edward Lim, Managing Consultant, CIZA Concept

Established in 2006 as a research institute at the National University of Singapore (NUS), the NUS Risk Management Institute (RMI) is dedicated to financial risk management. Its establishment was supported by the Monetary Authority of Singapore (MAS) under its program on Risk Management and Financial Innovation.

In 2009, RMI embarked on a non-profit Credit Research Initiative (CRI) in response to the financial crisis, with the intent to spur research and development in the critical area of credit rating. Rather than being just a typical research project, it wanted to demonstrate the operational feasibility of its research and become a trusted source of credit information.

CRI currently covers more than 35,000 companies in 106 economies in Asia-Pacific, North America, Europe, Latin America, Africa, and the Middle East.

By Edward Lim, Managing Consultant, CIZA Concept Founded by Rich Ho in Singapore in 2004, Richmanclub Studios is a motion picture production company. Its first official production was the short film, “The Alien Invasion” in […]

NVIDIA Tesla GPU accelerators are powering the world’s two most energy efficient supercomputers, according to the latest Green500 list published last week.

The winning system is Eurora at CINECA, Italy’s largest supercomputing centre, in Casalecchio di Reno. Equipped with NVIDIA Kepler architecture-based GPU accelerators – the highest-performance, most efficient accelerators ever built – Eurora delivers 3,210 MFlops per watt, making it 2.6 times more energy efficient than the best system using Intel CPUs alone (at Météo France). It also greatly surpasses the most efficient Intel Xeon Phi accelerator-based system, Beacon, at the National Institute for Computational Sciences, at the University of Tennessee.
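As a rough sanity check on the efficiency figures quoted above, the short sketch below works out what a 2.6-times advantage implies for the best CPU-only system. The function and variable names are illustrative, and the result is an estimate derived from the rounded numbers in the text, not a published Green500 value.

```python
# Figures quoted in the article (both rounded).
EURORA_MFLOPS_PER_WATT = 3210.0
ADVANTAGE_OVER_CPU_ONLY = 2.6  # "2.6 times more energy efficient"


def implied_baseline(efficiency: float, ratio: float) -> float:
    """Return the efficiency of the slower system implied by a quoted ratio."""
    return efficiency / ratio


# Estimated efficiency of the best CPU-only system, in MFlops per watt.
cpu_only = implied_baseline(EURORA_MFLOPS_PER_WATT, ADVANTAGE_OVER_CPU_ONLY)
print(f"Implied CPU-only efficiency: about {cpu_only:.0f} MFlops per watt")
```

On these numbers, the CPU-only system would deliver a little over 1,200 MFlops per watt.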

The number two system on the June 2013 Green500 list is the Aurora Tigon supercomputer at the Selex ES facilities in Chieti, Italy.

NVIDIA has collaborated with a research team at Stanford University to create the world’s largest artificial neural network built to model how the human brain learns. The network is 6.5 times bigger than the previous record-setting network developed by Google in 2012.

Computer-based neural networks are capable of “learning” how to model the behaviour of the brain – including recognising objects, characters, voices, and audio in the same way that humans do.

Yet creating large-scale neural networks is extremely computationally expensive. For example, Google used approximately 1,000 CPU-based servers, or 16,000 CPU cores, to develop its neural network, which taught itself to recognise cats in a series of YouTube videos. The network included 1.7 billion parameters, the virtual representation of connections between neurons.

Just a week after launching its top-of-the-line GeForce GTX 780, NVIDIA has added a scaled-down sibling to its impressive lineup of GPUs based on the award-winning NVIDIA Kepler architecture.

Priced at US$399, the new NVIDIA GeForce GTX 770 GPU joins the GTX TITAN and the GTX 780 in delivering superior gaming performance, advanced features, and silky smooth frame rates for unsurpassed high-definition PC gaming.

“Only NVIDIA GPUs consistently provide gamers with the ability to achieve extremely fast frame rates at high-definition resolutions with all of the eye candy turned on,” said Scott Herkelman, General Manager of the GeForce GPU business at NVIDIA. “The GeForce GTX 770 represents a new threshold of performance and features for under US$400 and our commitment to PC gaming is why GeForce GPUs continue to be the No. 1 choice of gamers worldwide.”

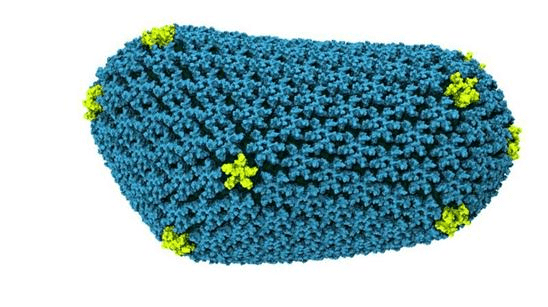

Researchers at the University of Illinois at Urbana-Champaign (UIUC) have achieved a major breakthrough in the battle to fight the spread of the human immunodeficiency virus (HIV) using NVIDIA Tesla GPU accelerators.

Featured on the cover of the latest issue of Nature, the world’s most-cited interdisciplinary science journal, a new paper details how UIUC researchers collaborating with researchers at the University of Pittsburgh School of Medicine have, for the first time, determined the precise chemical structure of the HIV “capsid,” a protein shell that protects the virus’s genetic material and is a key to its virulence. Understanding this structure may hold the key to the development of new and more effective antiretroviral drugs to combat a virus that has killed an estimated 25 million people and infected 34 million more.

UIUC researchers uncovered detail about the capsid structure by running the first all-atom simulation of HIV on the Blue Waters Supercomputer. Powered by 3,000 NVIDIA Tesla K20X GPU accelerators – the highest performance, most efficient accelerators ever built – the Cray XK7 supercomputer gave researchers the computational performance to run the largest simulation ever published, involving 64 million atoms.

Just three months after it introduced the NVIDIA GeForce GTX TITAN, NVIDIA has rolled out another top of the line graphics card. The NVIDIA GeForce GTX 780 GPU is designed to deliver great performance for […]

NVIDIA has unleashed the full graphics potential of enterprise desktop virtualisation with the availability of NVIDIA GRID vGPU integrated into Citrix XenDesktop 7.

NVIDIA GRID vGPU technology addresses a challenge that has grown in recent years with the rise of employees using their own notebooks and portable devices for work. These workers have increasingly relied on desktop virtualisation technologies for anytime access to computing resources, but until now this was generally used for the more standard enterprise applications. Performance and compatibility constraints had made it difficult to virtualise applications such as building information management (BIM), product-lifecycle management (PLM) and video-photo editing.

Two decades ago, hardware-based graphics replaced software emulation. Desktop virtualisation solutions stood alone as the only modern computing form without dedicated graphics hardware. As a result, an already busy virtualised CPU limited performance and software emulation hampered application compatibility.

What’s the sweet spot in pricing for gamers looking for a graphics card to play this year’s hottest games? While those who are more financially endowed will go for the highest end cards, such as the NVIDIA GeForce GTX 680 or 690, NVIDIA believes that most mainstream gamers are prepared to fork out around US$169.

Putting this belief into action, the company has just launched the NVIDIA GeForce GTX 650 Ti BOOST GPU, which is based on the NVIDIA Kepler architecture and equipped with 768 NVIDIA CUDA cores. This new product is available in two flavours – the 2GB version for US$169 and the 1GB configuration for US$149.

This introduction has led to a revision of pricing for other NVIDIA cards with the entry level

Acer has expanded its commercial offering with the Veriton P series and new Altos servers, which support a wide range of computing configurations and feature the Intel Xeon E3 and E5 processors, ECC memory, RAID support, and backup components to maximise the performance and run time.

“With a full range of high-performance products and a flexible operating strategy in place our new workstation and server lineup promises immediate benefits for the most demanding clients. We will be able to reach out to many more customers in the SMB, Enterprise, Education and Government sectors,” said Walter Deppeler, Senior Corporate VP and Head of Commercial Business at Acer.

The new Veriton P Series Workstations provide robust computing and rendering for the individual user. The Veriton P series targets customers ranging from small offices to large-scale corporations and public organisations, and packs NVIDIA’s professional 3D and 2D graphics cards with support across the Intel range of Xeon processors.

NVIDIA has introduced the industry’s first visual computing appliance that enables businesses to deliver ultra-fast GPU performance to any Windows, Linux or Mac client on their network.

The NVIDIA GRID Visual Computing Appliance (VCA) is a powerful GPU-based system that runs complex applications such as those from Adobe Systems, Autodesk and Dassault Systèmes, and sends their graphics output over the network to be displayed on a client computer. This remote GPU acceleration gives users the same rich graphics experience they would get from an expensive, dedicated PC under their desk.

NVIDIA GRID VCA provides enormous flexibility to small and medium-size businesses with limited IT infrastructures. Their employees can, through the simple click of an icon, create a virtual machine called a workspace. These workspaces – which are, effectively, dedicated, high-performance GPU-based systems – can be added, deleted or reallocated as needed.

Think graphics processing unit (GPU) and most would relate it mainly to gaming. But enterprises are finding new ways to leverage the power of GPUs to tackle big data analytics and advanced search for both consumer and commercial applications.

Shazam, Salesforce.com and Cortexica are among those expanding the use of GPUs beyond their traditional role of processing massive data sets and complex algorithms for high performance computing science and engineering applications. They rely on NVIDIA Tesla GPU accelerators for areas as diverse as audio search, big data analytics and image recognition.

“GPU accelerators provide great value to applications with lots of data or computations,” said Sumit Gupta, General Manager of the Tesla accelerated computing business at NVIDIA. “A growing number of applications that provide mobile service and social media analysis have both. And that’s prompting their providers to turn to GPU accelerators as they scale up their infrastructure to meet growing demand.”

Jen-Hsun Huang, NVIDIA’s co-founder and chief executive officer, will deliver the opening keynote address at the 2013 GPU Technology Conference (GTC) on Tuesday, March 19, beginning at 9 am PT. Huang will discuss the profound […]

A new range of NVIDIA Quadro professional graphics products offers unprecedented workstation performance and capabilities for professionals in manufacturing, engineering, medical, architectural, and media and entertainment companies.

Built on the ultra-efficient processing power of the NVIDIA Kepler architecture – the world’s fastest, most efficient GPU architecture – the new lineup includes:

A world-class line-up of keynote speakers will be present at the 4th GPU Technology Conference (GTC), which will be held at the McEnery Convention Center in San Jose, California, from March 18 to 21.

NVIDIA CEO and co-founder Jen-Hsun Huang will discuss the profound and growing impact of GPU technology in gaming, science, industry, media and entertainment, design and other fields in an opening keynote address on Tuesday, March 19 at 9 a.m. PT.

On Wednesday, March 20, Erez Lieberman Aiden, a pioneering genomics researcher, will discuss his work sequencing the human genome in 3D, which allows scientists to gain deep insights into gene behavior and fundamental biological processes of life. Aiden will reveal how his team harnesses GPUs to accelerate the analysis of massive amounts of genomic information and simulate the physical process of genome folding, uncovering insights into gene expression that can now be used by thousands of researchers.

GeForce GTX TITAN is built with the same NVIDIA Kepler architecture that powers Oak Ridge National Laboratory’s newly launched Titan supercomputer, which tops the list of the Top 500 supercomputers in the world.

By harnessing the power of three GeForce GTX TITAN GPUs simultaneously in three-way SLI mode, gamers can max out every visual setting without fear of a meltdown while playing any of the most demanding PC gaming titles.

NVIDIA has reported revenue for fiscal 2013 ended January 27, 2013, of a record $4.28 billion, up 7.1 percent from $4.00 billion in fiscal 2012.

GAAP earnings per share for the year were $0.90 per diluted share, a decrease of 4.3 percent from $0.94 in fiscal 2012. Non-GAAP earnings per diluted share were $1.17, down 1.7 percent from $1.19 in fiscal 2012. During the quarter, NVIDIA repurchased $100.0 million of stock and paid a dividend of $0.075 per share, equivalent to $46.9 million.
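The year-on-year changes quoted above can be reproduced with a few lines of arithmetic. This is a minimal sketch using only the rounded figures given in the text; the helper name `percent_change` is illustrative. Because the inputs are rounded, the revenue result comes out at about 7.0 percent, so the reported 7.1 percent presumably reflects unrounded figures.

```python
def percent_change(new: float, old: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100.0


# Revenue in billions of US dollars, earnings per share in US dollars.
revenue_growth = percent_change(4.28, 4.00)
gaap_eps_change = percent_change(0.90, 0.94)
non_gaap_eps_change = percent_change(1.17, 1.19)

# Share count implied by the dividend: total paid / dividend per share.
# 46.9 is in millions of US dollars, so the result is in millions of shares.
implied_shares_millions = 46.9 / 0.075

print(f"Revenue change:      {revenue_growth:+.1f}%")
print(f"GAAP EPS change:     {gaap_eps_change:+.1f}%")
print(f"Non-GAAP EPS change: {non_gaap_eps_change:+.1f}%")
print(f"Implied share count: about {implied_shares_millions:.0f} million")
```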

“This year we did the best work in our company’s history,” said Jen-Hsun Huang, president and chief executive officer of NVIDIA. “We achieved record revenues, margins and cash, despite significant market headwinds.

NVIDIA has announced Project SHIELD, a gaming portable for open platforms, designed for gamers who yearn to play when, where and how they want.

Created with the philosophy that gaming should be open and flexible, Project SHIELD flawlessly plays both Android and PC titles. As a pure Android device, it gives access to any game on Google Play. And as a wireless receiver and controller, it can stream games from a PC powered by NVIDIA® GeForce GTX GPUs, accessing titles on its STEAM game library from anywhere in the home.

“Project SHIELD was created by NVIDIA engineers who love to game and imagined a new way to play,” said Jen-Hsun Huang, co-founder and chief executive officer at NVIDIA. “We were inspired by a vision that the rise of mobile and cloud technologies will free us from our boxes, letting us game anywhere, on any screen. We imagined a device that would do for games what the iPod and Kindle have done for music and books, letting us play in a cool new way. We hope other gamers love SHIELD as much as we do.”

Project SHIELD combines the advanced processing power of NVIDIA Tegra 4, breakthrough game-speed Wi-Fi technology and stunning HD video and audio built into a console-grade controller. It can be used to play on its own integrated screen or on a big screen, and on the couch or on the go.

NVIDIA has introduced NVIDIA Tegra 4, the world’s fastest mobile processor, with record-setting performance and battery life to flawlessly power smartphones and tablets, gaming devices, auto infotainment and navigation systems, and PCs.

Tegra 4 offers exceptional graphics processing, with lightning-fast web browsing, stunning visuals and new camera capabilities through computational photography.

Previously codenamed “Wayne,” Tegra 4 features 72 custom NVIDIA GeForce GPU cores – or six times the GPU horsepower of Tegra 3 – which deliver more realistic gaming experiences and higher resolution displays. It includes the first quad-core application of ARM’s most advanced CPU core, the Cortex-A15, which delivers 2.6x faster web browsing and breakthrough performance for apps.

Tegra 4 also enables worldwide 4G LTE voice and data support through an optional chipset, the fifth-generation NVIDIA Icera® i500 processor. More efficient and 40 percent the size of conventional modems, i500 delivers four times the processing capability of its predecessor.

The increasing power of GPUs is enabling designers to create 3D models quicker and in greater detail. Such additional details may include specific models of cars, cameras or other products instead of just generic models. This should pave the way towards the democratisation of 3D.

However, a major barrier can halt this progress.

While creating a model of a specific product is now easier, using that model may be a challenge. Standing in the way are copyright laws and, more importantly, copyright owners.

“Copyright owners own the right to models of their products. They can decide whether users can use or should remove 3D models of their products. Users need to seek permission to use such models,” said Gavin Greenwalt, Senior Artist of Straightface Studios.

The Khronos Group has announced the ratification and public release of an update to the OpenCL 1.2 specification, the open, royalty-free standard for cross-platform, parallel programming of modern processors. This backwards-compatible version updates the core OpenCL 1.2 specification with bug fixes and clarifications and defines additional optional extensions for enhanced performance, functionality and robustness for parallel programming on a wide variety of platforms. Optional extensions are not required to be supported by a conformant OpenCL implementation, but are expected to be widely available; they define functionality that is likely to move into the required feature set in a future revision of the OpenCL specification. The updated OpenCL 1.2 specifications, together with online reference pages and reference cards, are available at www.khronos.org/opencl/.

“The OpenCL working group continues to listen closely to the demands of the developer community, and this update provides a timely increase in functionality and reliability of code ported across vendor implementations,” said Neil Trevett, chair of the OpenCL working group, president of the Khronos Group and vice president of mobile content at NVIDIA. “The new extensions enable early access to functionality for key use cases, including security capabilities for implementations of WebCL that enable access to OpenCL within a browser.”

Seventy more widely used applications have added support for GPU acceleration so far this year, bringing the total number available to researchers, engineers and designers to more than 200, according to NVIDIA. Three of the newest applications to […]