Daimler and Bosch have chosen the NVIDIA Drive Pegasus AI supercomputer to power their robotaxis, which are expected to start testing in Silicon Valley next year.

Category: Supercomputer

Intel tweet that launches a new GPU war

On June 13, Intel sent the GPU world into a flurry when it tweeted "Intel's first GPU coming in 2020". The media were quick to post stories about this upcoming GPU, which would add interesting competition to a market dominated by NVIDIA, with AMD a distant second.

The US recaptures world’s fastest supercomputer title with first exaop AI system

The United States has regained pole position in the race for the world's fastest supercomputer with the aptly named Summit.

Taiwan’s MOST and NVIDIA to collaborate on AI efforts

Taiwan is going big on artificial intelligence (AI) and its Ministry of Science and Technology (MOST) will be collaborating with NVIDIA on AI initiatives.

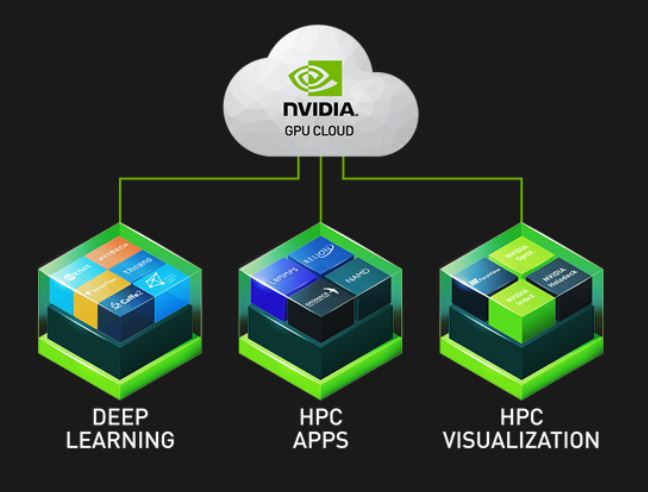

NVIDIA unveils first unified AI and HPC platform

NVIDIA has introduced the NVIDIA HGX-2, the first unified computing platform for both artificial intelligence (AI) and high performance computing (HPC).

ICT spending in APAC (sans Japan) to hit US$1.5t in 2021

Information and communications technology (ICT) spending in Asia/Pacific (excluding Japan) will hit US$1.5 trillion in 2021, according to IDC.

Panasonic powers facial recognition solution with NVIDIA deep learning technologies

Security is a major concern at airports, government buildings and major infrastructure sites around the world. Governments need to be able to quickly identify potential threats among the many people who enter and exit their countries daily. An effective facial recognition system is critical to safeguarding the country and its key infrastructure.

DEEPCORE teams up with NVIDIA to boost AI startups

Tokyo-based startup incubator DEEPCORE is partnering with NVIDIA to support AI startups and promote university research programmes across Japan.

NVIDIA DGX-2: Insanely powerful

NVIDIA CEO Jensen Huang dubbed it the "world's biggest GPU". And he certainly wasn't kidding, as the NVIDIA DGX-2 is a massive 350-pounder that delivers an amazing two petaflops of computational power.

GTC hits new highs

The GPU Technology Conference (GTC) has hit new highs, drawing a record of more than 8,000 participants and filling the entire San Jose McEnery Convention Center.

EmTech Asia: AI, quantum computing and more

Marina Bay Sands Expo and Convention Centre was a hive of activity of a different sort as more than 700 technologists from 21 countries converged for EmTech Asia on January 30 and 31.

Mindset change needed for an AI future

Think of artificial intelligence (AI) and the advent of powerful thinking machines, and images of Arnold Schwarzenegger in The Terminator come to mind.

NVIDIA Titan V: Not for gamers

It's easy to understand why the media and gamers got so excited following NVIDIA's announcement of the Titan V. After all, it's billed as "the world's most powerful GPU for the PC, driven by the world's most advanced GPU architecture, NVIDIA Volta".

Singapore’s AI agenda gets double boost!

Singapore's aim to be an artificial intelligence (AI) hub has been boosted by two initiatives: the setting up of a shared AI platform for researchers and the awarding of scholarships to develop AI talent.

At the NVIDIA AI Conference in Singapore yesterday, NVIDIA and Singapore's National Supercomputing Centre (NSCC) agreed to establish a platform to bolster AI capabilities among the country's academic, research and industry stakeholders, in support of AI Singapore (AISG), a national programme set up in May to drive AI adoption, research and innovation in Singapore.

Called AI.Platform@NSCC, it will provide AI training, technical expertise and computing services to AISG, which brings together all Singapore-based research and tertiary institutions, including the National University of Singapore (NUS), Nanyang Technological University (NTU), Singapore University of Technology and Design (SUTD), Singapore Management University (SMU), as well as research institutions in the Agency for Science, Technology and Research (A*STAR).

Tantalising line-up of speakers at NVIDIA AI Conference

More than 1,000 participants attending the NVIDIA AI Conference in Singapore next week are in for a treat as the organisers are bringing in a tantalising line-up of speakers.

The two keynote speakers are Dr David B Kirk, NVIDIA Fellow and inventor on more than 60 patents and patent applications relating to graphics design; and Dr Wanli Min, AI scientist at Alibaba Cloud, who will speak on "A Revolutionary Road to Data Intelligence".

Also on the programme are special guest-of-honour Chng Kai Fong, Managing Director of Singapore's Economic Development Board, and a panel discussion on AI for the Future of Singapore Economy.

NVIDIA to hold first AI-focused conference in Singapore in October

With artificial intelligence (AI) being a hot topic this year, NVIDIA is organising its first AI-focused regional conference in Singapore on October 23 and 24.

The event will be held in two parts: the first day focuses on a Deep Learning Institute (DLI) workshop where participants will receive hands-on training in deep learning, and the second day is filled with keynote addresses, a panel discussion and three tracks. It is targeted at data scientists and senior decision makers in the field of AI in both the public and private sectors.

“Singapore is aiming to be the world’s first smart nation and AI is playing a critical role. NVIDIA is well positioned to help drive the government’s Smart Nation initiative with the development of solutions based on AI. Our GPUs are making headlines across the world by enabling many breakthroughs in various industries using deep learning,” said Raymond Teh, Vice President of APAC sales and marketing at NVIDIA.

ICML: Gathering of the brightest in AI

“I’m amazed at the quality of the papers presented. The project teams’ line of thinking and breakthrough concepts are refreshing,” exclaimed a leading artificial intelligence (AI) scientist at the International Conference on Machine Learning (ICML) in Sydney.

The International Convention Centre Sydney was a massive hive of activity as 3,000 of the world's top researchers, developers and students in AI gathered for ICML. The participants moved rapidly from one workshop to another and took great interest in the exhibition booths of top deep learning proponents such as NVIDIA, Google and Facebook.

With so much bright young talent present, the event proved to be a good fishing ground for vendors, who held recruitment interviews at their booths and posted openings on the board.

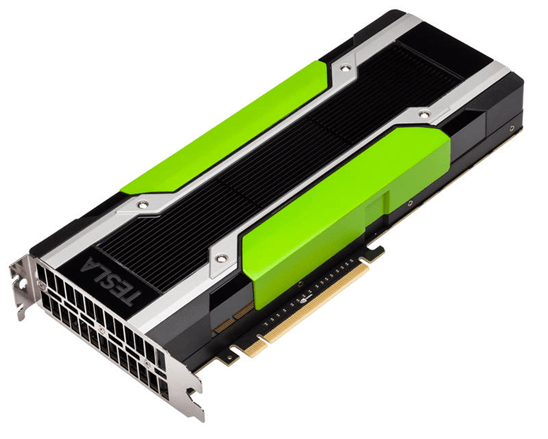

NVIDIA Tesla V100 surprise for world’s top AI researchers

Fifteen top AI research institutions in the NVIDIA AI Labs programme were each presented with the Volta-based NVIDIA Tesla V100 GPU accelerator.

They were participating in the Computer Vision and Pattern Recognition (CVPR) conference in Honolulu.

“AI is the most powerful technology force that we have ever known. I’ve seen everything. I’ve seen the coming and going of the client-server revolution. I’ve seen the coming and going of the PC revolution. Absolutely nothing compares,” said Jensen Huang, CEO of NVIDIA.

AI takes centrestage at ICML in Sydney

NVIDIA is bringing its wealth of artificial intelligence (AI) solutions and expertise to the International Conference on Machine Learning (ICML) in Sydney.

Held at the International Convention Centre Sydney from August 6 to 11, the event is expected to attract up to 3,000 participants, primarily faculty, researchers and PhD students in machine learning, data science, data mining, AI, statistics and related fields.

The NVIDIA booth (Level 2, The Gallery, Booth #4) will feature several demos making their Australian debut, including:

- 4K style transfer, in which a deep neural network extracts a specific artistic style from a source painting and then synthesises it with the content of a separate video;

- self-driving cars using the DRIVE PX 2 AI car computing platform;

- the DeepStream SDK, which simplifies development of high-performance video analytics applications powered by deep learning; and

- NVIDIA Isaac, the AI-based software platform that lets developers train virtual robots using detailed and highly realistic test scenarios.

NVIDIA and Baidu team up on AI

NVIDIA and Baidu have teamed up to bring artificial intelligence (AI) technology to cloud computing, self-driving vehicles and AI home assistants.

Baidu will deploy NVIDIA HGX architecture with Tesla Volta V100 and Tesla P4 GPU accelerators for AI training and inference in its data centres. Combined with Baidu’s PaddlePaddle deep learning framework and NVIDIA’s TensorRT deep learning inference software, researchers and companies can harness state-of-the-art technology to develop products and services with real-time understanding of images, speech, text and video.

To accelerate AI development, the companies will work together to optimise Baidu’s open-source PaddlePaddle deep learning framework on NVIDIA’s Volta GPU architecture.

NVIDIA receives DOE funding for HPC research

NVIDIA is among six technology companies to receive a total of US$258 million in funding from the US Department of Energy's Exascale Computing Project (ECP).

The funding is to accelerate the development of next-generation supercomputers with the delivery of at least two exascale computing systems, one of which is targeted by 2021.

Such systems would be about 50 times more powerful than the US’ fastest supercomputer, Titan, located at Oak Ridge National Laboratory.

Taiwan: Home of GeForce!

At the keynote of the NVIDIA AI Forum, NVIDIA CEO and Founder Jensen Huang declared: "Taiwan is the home of NVIDIA's GeForce system".

Video gaming is a US$100 billion industry and “GeForce PC gaming is the number one platform, nearly 200 million GeForce installed base,” declared Huang.

He announced the new NVIDIA Max-Q platform which lets gaming notebook makers produce faster, slimmer and quieter machines.

Voila, Volta!

NVIDIA has pulled yet another trick out of its always-filled hat of technology goodies with the launch of Volta, the world’s most powerful GPU computing architecture. At his keynote address at GTC in San Jose, NVIDIA CEO Jensen Huang dubbed it “the next level of computer projects”.

Volta is designed to drive the next wave of advancement in artificial intelligence (AI) and high performance computing.

The first Volta-based processor is the NVIDIA Tesla V100 data centre GPU, which brings extraordinary speed and scalability for AI inferencing and training, as well as for accelerating HPC and graphics workloads.

Rise of accelerated computing in data centres

Can't say this was unexpected: NVIDIA has responded to Google's claim that its custom ASIC, the Tensor Processing Unit (TPU), was up to 30 times faster than CPUs and NVIDIA's K80 GPU for inferencing workloads.

NVIDIA pointed out that Google's TPU paper draws a clear conclusion: without accelerated computing, the scale-out of AI is simply not practical.

The role of data centres has changed considerably in today’s economy. Instead of just serving web pages, advertising and video content, data centres are now recognising voices, detecting images in video streams and connecting users with information they need when they need it.

Singapore universities deploy deep learning supercomputers

First, it was Singapore Management University (SMU). Now two other Singapore universities — Singapore University of Technology and Design (SUTD) and Nanyang Technological University (NTU) — have also deployed the NVIDIA DGX-1 deep learning supercomputer for their research projects on artificial intelligence (AI).

SUTD will use the DGX-1 at the SUTD Brain Lab to further research into machine reasoning and distributed learning. Under a memorandum of understanding signed earlier this month, NVIDIA and SUTD will also set up the NVIDIA-SUTD AI Lab to leverage the power of GPU-accelerated neural networks for researching new theories and algorithms for AI. The agreement also provides for internship opportunities to selected students of the lab.

“Computational power is a game changer for AI research, especially in the areas of big data analytics, robotics, machine reasoning and distributed intelligence. The DGX-1 will enable us to perform significantly more experiments in the same period of time, quickening the discovery of new theories and the design of new applications,” said Professors Shaowei Lin and Georgios Piliouras, Engineering Systems and Design, SUTD.

RIKEN turns to NVIDIA supercomputer for deep learning research

RIKEN, Japan’s largest comprehensive research institution, will have a new supercomputer for deep learning research in April. Built by Fujitsu using 24 NVIDIA DGX-1 AI systems, the new machine will accelerate the application of artificial intelligence (AI) to […]

Gunning for supercomputing supremacy in Japan

Tokyo Institute of Technology plans to create Japan's fastest AI supercomputer, which will deliver more than twice the performance of its predecessor and slide into the world's top 10 fastest systems.

Called Tsubame 3.0, it will use Pascal-based NVIDIA P100 GPUs, which are nearly three times as efficient as their predecessors, to reach an expected 12.2 petaflops of double-precision performance.

Tsubame 3.0 will excel in AI computation with more than 47 PFLOPS of AI horsepower. When operated with Tsubame 2.5, it is expected to deliver 64.3 PFLOPS, making it Japan’s highest performing AI supercomputer.

SMU uses NVIDIA DGX-1 supercomputer for food recognition project

Singapore is renowned as a food paradise. And with so many mouth-watering dishes to pick from, sometimes even locals have difficulty identifying a specific dish.

Singapore Management University (SMU) is working on a food artificial intelligence (AI) application that uses a supercomputer to help recognise local dishes, with the aim of promoting smart food consumption and a healthy lifestyle.

The project, developed as part of Singapore’s Smart Nation initiative, requires the analysis of a large number of food photos.

NVIDIA DGX SATURNV ranked most efficient supercomputer

NVIDIA’s new DGX SATURNV supercomputer is ranked the world’s most efficient — and 28th fastest overall — on the latest Top500 list of supercomputers.

Powered by new Tesla P100 GPUs, it delivers 9.46 gigaflops/watt — a 42 percent improvement from the 6.67 gigaflops/watt delivered by the most efficient machine on the Top500 list released last June.
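As a quick check of the quoted improvement, the relative gain in energy efficiency can be worked out directly from the two figures above (plain arithmetic on the article's numbers, nothing more):

$$
\frac{9.46 - 6.67}{6.67} = \frac{2.79}{6.67} \approx 0.418 \approx 42\%
$$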

SATURNV is 2.3 times more energy efficient than a supercomputer of similar performance, the Camphor 2 system, which is powered by Intel Xeon Phi Knights Landing processors.

That efficiency is key to building machines capable of reaching exascale speeds, or 1 quintillion (1 billion billion) floating-point operations per second. Such a machine could help design efficient new combustion engines, model clean-burning fusion reactors, and achieve new breakthroughs in medical research.

China builds world's fastest supercomputer without US chips

China has continued its lead in the race for the world's fastest supercomputer with the Sunway TaihuLight, whose Linpack mark of 93 petaflops outperforms the former TOP500 champ, Tianhe-2, by a factor of nearly three. What's more remarkable is […]

NVIDIA unveils world’s first deep learning supercomputer

At his opening keynote address at GTC in San Jose, Jen-Hsun Huang, CEO of NVIDIA, made a slew of announcements, including the world's first deep learning supercomputer to meet the unlimited computing demands of artificial intelligence (AI).

As the first system designed specifically for deep learning, the NVIDIA DGX-1 comes fully integrated with hardware, deep learning software and development tools for quick, easy deployment. It is a turnkey system that contains a new generation of GPU accelerators, delivering throughput equivalent to 250 x86 servers.

The DGX-1 deep learning system enables researchers and data scientists to easily harness the power of GPU-accelerated computing to create a new class of intelligent machines that learn, see and perceive the world as humans do. It delivers unprecedented levels of computing power to drive next-generation AI applications, allowing researchers to dramatically reduce the time to train larger, more sophisticated deep neural networks.

Monash University launches M3 to accelerate research

Monash University is taking research to another level with the launch of M3, the third-generation supercomputer available through the MASSIVE (Multi-modal Australian ScienceS Imaging and Visualisation Environment) facility.

Powered by ultra-high-performance NVIDIA Tesla K80 GPU accelerators, M3 will provide new simulation and real-time data processing capabilities to a wide selection of Australian researchers.

“Our collaboration with NVIDIA will take Monash research to new heights. By coupling some of Australia’s best researchers with NVIDIA’s accelerated computing technology we’re going to see some incredible impact. Our scientists will produce code that runs faster, but more significantly, their focus on deep learning algorithms will produce outcomes that are smarter,” said Professor Ian Smith, Vice Provost (Research and Research Infrastructure), Monash University.

NVIDIA adds AI and supercomputing prowess to driverless cars

The new NVIDIA DRIVE PX 2 is set to give driverless cars a major boost.

Touted as the world's most powerful engine for in-vehicle artificial intelligence, it allows the automotive industry to use AI to tackle the complexities inherent in autonomous driving. NVIDIA DRIVE PX 2 uses deep learning on NVIDIA's advanced GPUs for 360-degree situational awareness around the car, to determine precisely where the car is and to compute a safe, comfortable trajectory.

“Drivers deal with an infinitely complex world. Modern artificial intelligence and GPU breakthroughs enable us to finally tackle the daunting challenges of self-driving cars,” said Jen-Hsun Huang, Co-founder and CEO of NVIDIA. “NVIDIA’s GPU is central to advances in deep learning and supercomputing. We are leveraging these to create the brain of future autonomous vehicles that will be continuously alert, and eventually achieve superhuman levels of situational awareness. Autonomous cars will bring increased safety, new convenient mobility services and even beautiful urban designs – providing a powerful force for a better future.”

Accelerated systems account for more than 20% of TOP500 supercomputers

Accelerated systems, or GPU-powered systems, for the first time accounted for more than 100 of the systems on the list of the world's 500 most powerful supercomputers. Together they deliver a total of 143 petaflops, over one-third of the list's total FLOPS.

NVIDIA Tesla GPU-based supercomputers comprise 70 of these systems – including 23 of the 24 new systems on the list – reflecting compound annual growth of nearly 50 percent over the past five years.
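As a rough illustration of what that growth rate implies, and assuming the "nearly 50 percent" figure refers to annual growth in the number of Tesla-accelerated systems on the list (the article does not say so explicitly), compounding 50 percent a year over five years multiplies the starting count by roughly 7.6:

$$
(1 + 0.5)^{5} = 1.5^{5} \approx 7.59
$$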

There are three primary reasons accelerators are increasingly being adopted for high performance computing:

- Moore’s Law continues to slow, forcing the industry to find new ways to deliver computational power more efficiently.

- Hundreds of applications – including the vast majority of those most commonly used – are now GPU accelerated.

- Even modest investments in accelerators can now result in significant increases in throughput, maximising efficiency for supercomputing sites and hyperscale datacentres.

NVIDIA Jetson TX1 powers machine learning

As the first embedded computer designed to process deep neural networks, the new NVIDIA Jetson TX1 is set to enable a new wave of smart devices. Drones will evolve beyond flying by remote control to navigating through a forest for search and rescue. Security surveillance systems will be able to identify suspicious activities, not just scan crowds. Robots will be able to perform tasks customised to individuals’ habits.

That is the promise of the credit-card-sized module, which harnesses the power of machine learning to enable a new generation of smart, autonomous machines that can learn.

Deep neural networks are software that can learn to recognise objects or interpret information. This new approach to programming computers is called machine learning, and it can be used to perform complex tasks such as recognising images, processing conversational speech, or analysing a room full of furniture and finding a path across it. Machine learning is a groundbreaking technology that will give autonomous devices a giant leap in capability.

NVIDIA supercharges Microsoft Azure

Virtualisation just got a turbocharge with the introduction of NVIDIA GPU-enabled professional graphics applications and accelerated computing capabilities on the Microsoft Azure cloud platform.

Microsoft is the first to leverage NVIDIA GRID 2.0 virtualised graphics for its enterprise customers.

Businesses will be able to choose the level of graphics capability they need. They can deploy NVIDIA Quadro-grade professional graphics applications and accelerated computing on-premises, in the cloud through Azure, or via a hybrid of the two, using both Windows and Linux virtual machines.

Giving researchers instant feedback with immersive visualisation technologies

At GTC South Asia, Monash University shared how it has leveraged GPU technology to transform the way research is done. Entelechy Asia catches up with the university’s Professor Paul Bonnington (Professor and Director of E-research […]

NVIDIA introduces Tesla K80

NVIDIA has unveiled the Tesla K80 dual-GPU accelerator designed for a wide range of machine learning, data analytics, scientific, and high performance computing (HPC) applications.

The Tesla K80 dual-GPU accelerator is the new flagship offering of the Tesla Accelerated Computing Platform, the leading platform for accelerating data analytics and scientific computing.

It combines the world’s fastest GPU accelerators, the widely used CUDA parallel computing model, and a comprehensive ecosystem of software developers, software vendors, and datacentre system OEMs.

NVIDIA clinches Computex Best Choice Awards for Tegra K1 and GRID

NVIDIA has done the double by snaring the Computex Best Choice Award for its NVIDIA GRID technology and the Golden Award for the NVIDIA Tegra K1 mobile processor.

This is the sixth year running that NVIDIA has picked up the award, marking the longest winning streak of any international Computex exhibitor. More than 475 technology products from nearly 200 vendors competed for this year’s recognition.

Tegra K1 is a 192-core super chip, built on the NVIDIA Kepler architecture — the world’s most advanced and energy-efficient GPU. Tegra K1’s 192 fully programmable CUDA cores deliver the most advanced mobile graphics and performance, and its compute capabilities open up many new applications and experiences in fields such as computer vision, advanced imaging, speech recognition and video editing.

NVIDIA unveils first mobile supercomputer for embedded systems

Dubbed the world’s first mobile supercomputer for embedded systems, the NVIDIA® Jetson TK1 platform will enable the development of a new generation of applications that employ computer vision, image processing and real-time data processing.

It provides developers with the tools to create systems and applications that can enable robots to seamlessly navigate, physicians to perform mobile ultrasound scans, drones to avoid moving objects and cars to detect pedestrians.

With unmatched performance of 326 gigaflops, nearly three times more than any similar embedded platform, the Jetson TK1 comes with a Developer Kit that includes a full C/C++ toolkit based on NVIDIA CUDA, the most pervasive parallel computing platform and programming model. This makes it much easier to program than the FPGAs, custom ASICs and DSP processors commonly used in current embedded systems.
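The 326-gigaflop figure is consistent with a standard peak-throughput estimate for the Tegra K1 chip at the heart of the Jetson TK1, whose 192 CUDA cores are described in the Computex item below. This sketch assumes each core completes one single-precision fused multiply-add (counted as two floating-point operations) per cycle and a GPU clock of roughly 850 MHz; the clock speed is an assumption, as the article does not state it:

$$
192 \ \text{cores} \times 2 \ \tfrac{\text{FLOP}}{\text{cycle}} \times 0.85 \times 10^{9} \ \tfrac{\text{cycles}}{\text{s}} \approx 326 \times 10^{9} \ \tfrac{\text{FLOP}}{\text{s}}
$$

This is a theoretical peak; sustained throughput in real applications will normally be lower.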

New NVIDIA Tesla K40 speeds up supercomputing and big data analytics

The NVIDIA Tesla K40 GPU accelerator is arguably the highest-performance accelerator ever built. It is capable of delivering extreme performance to a wide range of scientific, engineering, high performance computing (HPC) and enterprise applications.

Providing double the memory and up to 40 percent higher performance than its predecessor, the Tesla K20X GPU accelerator, and 10 times higher performance than the fastest CPU, the Tesla K40 GPU is the world’s first and highest-performance accelerator optimised for big data analytics and large-scale scientific workloads.

Featuring intelligent NVIDIA GPU Boost technology, which converts power headroom into a user-controlled performance boost, the Tesla K40 GPU accelerator enables users to unlock the untapped performance of a broad range of applications.

Beginning of the Digital Industrial Economy

Worldwide IT spending is forecast to reach US$3.8 trillion in 2014, a 3.6 percent increase from 2013, but it’s the opportunities of a digital world that have IT leaders excited, according to Gartner.

The beginning of the Digital Industrial Economy will make every budget an IT budget; every company a technology company; every business a digital leader, and every person a technology company.

“The Digital Industrial Economy will be built on the foundations of the Nexus of Forces (which includes a confluence and integration of cloud, social collaboration, mobile and information) and the Internet of Everything by combining the physical world and the virtual,” said Peter Sondergaard, Senior Vice President of Gartner and Global Head of Research.

NVIDIA acquires PGI

NVIDIA has made further inroads into high performance computing (HPC) with the acquisition of The Portland Group (PGI), a leading independent supplier of compilers and tools. Founded in 1989, PGI has a long history of innovation […]

NVIDIA launches world’s first GPU-accelerated platform for geospatial intelligence analysts

NVIDIA has launched the NVIDIA GeoInt Accelerator, the world’s first GPU-accelerated geospatial intelligence platform to enable security analysts to find actionable insights quicker and more accurately than ever before from vast quantities of raw data, images and video.

The platform provides defence and homeland security analysts with tools that enable faster processing of high-resolution satellite imagery, facial recognition in surveillance video, combat mission planning using geographic information system (GIS) data, and object recognition in video collected by drones.

It offers a complete solution consisting of an NVIDIA Tesla GPU accelerated system, software applications for geospatial intelligence analysis, and advanced application development libraries.

Gartner adjusts worldwide IT spending projection downwards to 2% growth this year

Gartner has revised its worldwide IT spending forecast for 2013 downwards to US$3.7 trillion. Last quarter, the research firm predicted a 4.1 percent increase over 2012, but that projection has now been roughly halved to 2 percent. This reduction takes into account the impact of recent fluctuations in US dollar exchange rates.

Gartner has adjusted its worldwide IT spending forecast for 2013 downwards to US$3.7 trillion in 2013. Last quarter, the research firm predicted a 4,1 percent increase compared to 2012 but that projection has been sliced by half to 2 percent. This reduction takes into account the impact of recent fluctuations in US dollar exchange rates.

The Gartner Worldwide IT Spending Forecast is a leading indicator of major technology trends across the hardware, software, IT services and telecom markets. For more than a decade, global IT and business executives have used these highly anticipated quarterly reports to recognise market opportunities and challenges, and to base their critical business decisions on proven methodologies rather than guesswork.

“Exchange rate movements, and a reduction in our 2013 forecast for devices, account for the bulk of the downward revision of the 2013 growth,” said Richard Gordon, Managing Vice President at Gartner.

NVIDIA Tesla GPU accelerators power world’s most energy efficient supercomputer

NVIDIA Tesla GPU accelerators are powering the world’s two most energy efficient supercomputers, according to the latest Green500 list published last week.

The winning system is Eurora at CINECA, Italy’s largest supercomputing centre, in Casalecchio di Reno. Equipped with NVIDIA Kepler architecture-based GPU accelerators – the highest-performance, most efficient accelerators ever built – Eurora delivers 3,210 MFlops per watt, making it 2.6 times more energy efficient than the best system using Intel CPUs alone (at Météo France). It also greatly surpasses the most efficient Intel Xeon Phi accelerator-based system, Beacon, at the National Institute for Computational Sciences, at the University of Tennessee.

The number two system on the June 2013 Green500 list is the Aurora Tigon supercomputer at the Selex ES facilities in Chieti, Italy.

Stanford University researchers build world’s largest artificial neural network

NVIDIA has collaborated with a research team at Stanford University to create the world’s largest artificial neural network built to model how the human brain learns. The network is 6.5 times bigger than the previous record-setting network developed by Google in 2012.

Computer-based neural networks are capable of “learning” how to model the behaviour of the brain – including recognising objects, characters, voices, and audio in the same way that humans do.

Yet creating large-scale neural networks is extremely computationally expensive. For example, Google used approximately 1,000 CPU-based servers, or 16,000 CPU cores, to develop its neural network, which taught itself to recognise cats in a series of YouTube videos. The network included 1.7 billion parameters, the virtual representation of connections between neurons.

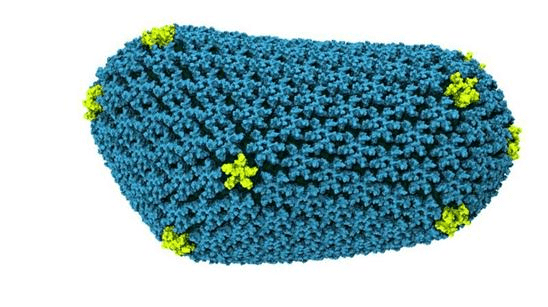

NVIDIA GPU accelerators enable HIV research breakthrough

Researchers at the University of Illinois at Urbana-Champaign (UIUC) have achieved a major breakthrough in the battle to fight the spread of the human immunodeficiency virus (HIV) using NVIDIA Tesla GPU accelerators.

Featured on the cover of the latest issue of Nature, the world’s most-cited interdisciplinary science journal, a new paper details how UIUC researchers collaborating with researchers at the University of Pittsburgh School of Medicine have, for the first time, determined the precise chemical structure of the HIV “capsid,” a protein shell that protects the virus’s genetic material and is a key to its virulence. Understanding this structure may hold the key to the development of new and more effective antiretroviral drugs to combat a virus that has killed an estimated 25 million people and infected 34 million more.

UIUC researchers uncovered details of the capsid structure by running the first all-atom simulation of HIV on the Blue Waters supercomputer. Powered by 3,000 NVIDIA Tesla K20X GPU accelerators – the highest-performance, most efficient accelerators ever built – the Cray XK7 supercomputer gave researchers the computational performance to run the largest simulation ever published, involving 64 million atoms.

NVIDIA unleashes GeForce GTX TITAN

GeForce GTX TITAN is built with the same NVIDIA Kepler architecture that powers Oak Ridge National Laboratory’s newly launched Titan supercomputer, which tops the TOP500 list of the world’s fastest supercomputers.

By harnessing the power of three GeForce GTX TITAN GPUs simultaneously in three-way SLI mode, gamers can max out every visual setting without fear of a meltdown while playing any of the most demanding PC gaming titles.

Eurora supercomputer sets world record for energy efficiency

Italy’s “Eurora” supercomputer has set a new record for data centre energy efficiency. Based on NVIDIA Tesla GPU accelerators, it was built by Eurotech and is deployed at the Cineca facility in Bologna, Italy’s most powerful supercomputing centre. Eurora reaches 3,150 megaflops per watt of sustained performance – a mark 26 percent higher than the top system on the most recent Green500 list of the world’s most efficient supercomputers.

Eurora set this record by combining 128 high-performance, energy-efficient NVIDIA Tesla K20 accelerators with the Eurotech Aurora Tigon supercomputer. The Tigon features innovative Aurora Hot Water Cooling technology, which applies direct hot water cooling to all electronic and electrical components of the HPC system.

Available to members of the Partnership for Advanced Computing in Europe (PRACE) and major Italian research entities, Eurora will enable scientists to advance research and discovery across a range of scientific disciplines, including material science, astrophysics, life sciences, and Earth sciences.

Titan is fastest supercomputer

The US now has the fastest supercomputer in the world. Running on the newly released NVIDIA Tesla K20 family of GPU accelerators, Titan is the world’s fastest supercomputer according to the TOP500 list released at the […]